Beginner’s Guide to Autonomous Intelligence in 2026

AI ML November 19, 2025

Agentic AI and Cloud Computing: Beginner’s Guide to Autonomous Intelligence in 2026

Two disruptions are reshaping Industry 4.0 work processes today: agentic AI and cloud computing. Cloud-native AI agents combine the autonomous, not just assistive, character of agentic AI with the scalable infrastructure of cloud computing. Together, the two can be invaluable additions to high-level corporate strategy in industries such as manufacturing, legal, healthcare, retail, and non-profits. Why? Because the combination can drive daily operational efficiency, innovation, and competitive advantage.

The Cloud-Native AI Agent Synergy

To make a quick, tangible case, consider resilience and continuity in business processes, an area that could benefit from an autonomous AI system. Cloud platforms like GCP (Google Cloud Platform) and AWS (Amazon Web Services) support distributed architectures. In simple terms, a workload runs across multiple servers, so a backup is available if one of them fails. When we integrate an autonomous system like agentic AI into this already redundant setup, even a brief, minor disruption such as a server failure can be handled automatically. The intelligent system can not only notice the failure in time but also switch to a backup, a different server, or a restarted one on its own.

What is Agentic AI?

Before we move on to the practical industry use cases of agentic AI and cloud computing, and how we help you deploy the combination to boost your production, let us cover the groundwork: what exactly does this buzzword mean? For a beginner’s guide, it is of paramount importance to understand that agentic AI is not just an industry buzzword but has real, positive use cases if deployed correctly.
In essence, it refers to intelligent systems that take initiative, make decisions, and execute tasks, like the business continuity and resilience case above, without constant manual intervention.

Why Is Cloud Computing Essential for Agentic AI?

Fundamentally, cloud computing is the delivery of computing services like servers, storage, databases, software, and AI over the internet instead of from hardware you own and run yourself. It’s like buying a Netflix subscription (think of it as a cloud content library) to watch movies and TV shows instead of owning physical DVDs. Apple iCloud, Microsoft OneDrive, and Google Drive are some consumer-focused cloud storage examples. This model of scale and scope is essential for deploying autonomous AI systems, which need infrastructure and flexibility to do their job reliably and consistently without costing an arm and a leg.

How Tech360 Helps Enterprises Deploy Agentic AI

Strategy and Readiness Assessment

Any change driven by tech disruption creates a ripple effect of business process change, ultimately ending in culture change. At Tech360, we understand this, so we start not with deployment but with a structured pre-deployment phase consisting of existing workflow analysis, a data maturity review, resistance mapping, and feasibility assessments. This enables us to identify underlying gaps and develop a high-value agentic AI strategy that creates maximum impact while ensuring business and regulatory compliance.

Cloud-Native AI Architecture Design

Once the strategy is in place, our team begins the design process by developing a secure, scalable cloud architecture on platforms like Azure, Google Cloud, or AWS. This ensures that the architecture acts as a resilient environment, with strong identity controls, distributed computing, and elastic scaling, in which autonomous agents can operate efficiently, handle variable workloads, and maintain continuous uptime.
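The server-failure scenario described earlier, where an agent notices a failed server and switches to a backup on its own, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `Server` class, the `is_healthy` probe, and the `pick_active` function are hypothetical names invented for this example, and a real deployment would rely on the health checks and load balancers of the chosen cloud platform.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Server:
    name: str
    is_healthy: Callable[[], bool]  # hypothetical health probe, e.g. a ping


def pick_active(servers: Sequence[Server],
                current: Optional[Server] = None) -> Optional[Server]:
    """Keep the current server while it is healthy; otherwise fail over
    to the first other server whose health probe succeeds."""
    if current is not None and current.is_healthy():
        return current
    for server in servers:
        if server is not current and server.is_healthy():
            return server
    return None  # nothing is healthy: escalate to a human


# Simulated fleet: a dict stands in for real health checks (assumption).
status = {"primary": True, "backup-1": True, "backup-2": True}
servers = [Server(name, lambda n=name: status[n]) for name in status]

active = pick_active(servers)          # everything is up: primary is chosen
status["primary"] = False              # the primary server fails
active = pick_active(servers, active)  # the agent switches on its own
print(active.name)                     # prints "backup-1"
```

An agent would call `pick_active` on a schedule or whenever a request fails. The decision logic stays the same while the probe behind `is_healthy` changes from platform to platform.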
Intelligent Agent Development and Integration

Tech360 develops custom intelligent agents that can plan, execute, and self-optimize based on real-time data. These agents integrate with existing enterprise systems through APIs, middleware, and secure connectors, ensuring compatibility with legacy platforms such as ERP, MES, CRM, EMR, and legal document management systems. Multi-agent workflows are created for complex, cross-departmental processes where agents collaborate and delegate tasks autonomously.

Deployment, Monitoring, and Governance

Once developed, agents are deployed within controlled cloud environments supported by real-time monitoring dashboards, logging pipelines, and automated alerts. Tech360 establishes governance frameworks to oversee performance, reliability, and cost efficiency. Continuous improvement loops help refine agent decisions and ensure the system adapts to new data and changing business conditions.

Responsible & Ethical AI Frameworks

Tech360 embeds robust ethical controls into every deployment. This includes bias checks, explainability layers, access safeguards, and detailed audit trails. Comprehensive governance ensures agentic AI operates responsibly, aligns with compliance standards, and maintains transparency, enabling enterprises to trust autonomous systems at scale.

Future of AI Autonomous Systems: 2026 Outlook

The next decade will see enterprises move beyond single AI agents toward multi-agent ecosystems, where autonomous systems coordinate, negotiate, and execute tasks collectively. These interconnected agents will function like digital teams, handling complex, cross-functional workflows with minimal human direction. At the same time, organizations will embrace hybrid AI-human collaboration, where AI handles high-volume, analytical, or repetitive decisions while humans focus on judgment-based, creative, and strategic work. This balance will reshape job roles and elevate workforce capabilities across industries.
Manufacturing, retail, and logistics will experience the rise of autonomous supply chains, where agents independently manage procurement, forecasting, routing, and inventory alignment in real time. These systems will reduce delays, optimize resource allocation, and increase profitability. Meanwhile, enterprises will rely on AI-driven intelligence for resilience and risk detection, using continuous data analysis to spot vulnerabilities, operational disruptions, and emerging threats before they escalate.

Sustainability will also become a core outcome of autonomous operations. Agentic AI will optimize energy consumption, minimize waste, and create efficient resource usage patterns across facilities and processes. As these capabilities mature, autonomous enterprises will become more adaptive, efficient, and environmentally responsible, setting new global standards for how organizations operate and grow.

How AI is Transforming CI/CD in DevOps in 2026

AI ML November 19, 2025

In software development, combining development and operations (better known as DevOps) has long been considered the benchmark for rapid go-to-market releases. Why? Primarily because it runs on the core principles of continuous integration and continuous deployment (CI/CD), which automate much of the traditional development workflow. However, with the recent breakthroughs in machine learning and artificial intelligence and their wide adoption in DevOps, bringing ML and AI into CI/CD pipelines has become a necessity. In software lifecycle management, intelligent DevOps automation addresses the issues that arise when teams working on large-scale systems deliver software and monitor its performance continuously.

Issues With Traditional CI/CD in DevOps

AI in CI/CD pipelines is set to change the way software is developed, deployed, and monitored by addressing the following challenges:

Reactive Testing

In most software development projects, tests and monitoring checks have run after the code is deployed, an approach known as reactive testing. By then it is often too late to catch critical bugs. With platforms like GitHub and Jenkins, AI- or ML-based plugins can proactively predict operational or build failures, saving teams time and energy.

Manual Approvals

Traditional development has relied heavily on manual supervision in the form of periodic human checks and approvals. AI is not yet sophisticated enough to eliminate manual intervention completely, but it can take over a critical part of the development cycle. Platforms like Spinnaker and Argo CD help analyze past deployments and predict future risks to inform rollout and rollback strategies in DevOps automation.

Performance Bottlenecks

Another roadblock to fast, smooth delivery and release updates is infrastructure limits.
Monitoring tools like Datadog and Prometheus, combined with ML-based extensions, help here too by spotting resource problems early and keeping pipelines running smoothly.

Limited Insights

Dashboards that integrate MLOps and CI/CD do not just track and visualize performance metrics; they also indicate what is likely to happen next. AI-led code analysis solutions like SonarQube and DeepCode spot new issues, identify related patterns, and suggest relevant improvements. This helps prevent security flaws from hitting production and impacting end users.

Jenkins Use Case in Intelligent DevOps Automation

Everything we have talked about so far is still just words. What does AI in DevOps look like in practice when an MLOps and CI/CD integration does not just automate tasks but actually learns from your workflows and can potentially manage itself without manual intervention? Let us find out through the following example.

What’s the Challenge?

A SaaS company in a competitive landscape, with a large amount of invested capital and ambitious business goals, was struggling to keep up with build cycles and timely releases. The codebase was massive, and the CI/CD pipeline took hours to complete. In a ripple effect, this slowed down test result analysis and feedback loops, making the delays costly.

Challenge: Testing large codebases takes too long.

What’s the Solution?

The engineering team ran an experiment, adding AI-driven testing to their Jenkins pipeline. Instead of running the full test suite after every code change, as they were used to doing, the team tested an MLOps and CI/CD integration that could predict which tests were relevant to their latest code updates.

Solution: Use AI-driven test prioritization in Jenkins.

What’s the Approach?

The model analyzed relevant factors such as historical test results, commit histories, and code dependencies.
This flagged the high-risk areas most likely to fail and immediately narrowed the scope of testing, saving the team time. Based on this insight, Jenkins was able to dynamically reorder the test cases and execute only the most impactful ones. As the AI repeatedly refined its selection, the system became increasingly self-sufficient.

How It Works: ML models select only high-impact tests based on previous failures.

What’s the Result?

The team achieved a 40% reduction in overall build times, which sped up the CI/CD process, closed feedback loops faster, helped identify and resolve bugs more quickly, and allowed more frequent releases, all while maintaining high quality standards.

Result: 40% reduction in build times and faster bug detection.

Future Trends: MLOps and Autonomous Pipelines

The past had its big revolution with the merger of development and operations. Now, we are seeing an increase in AI for continuous integration and delivery and in DevOps automation practices. The truly futuristic and sustainable move, though, lies in MLOps and CI/CD integration. Here are six reasons why:

The Merge of DevOps and MLOps

The line between DevOps and machine learning operations (MLOps) is blurring fast. Teams are adopting unified pipelines that handle both application and model delivery, making it easier to deploy AI models alongside traditional code without friction.

Self-Healing Pipelines

Imagine a pipeline that not only detects errors but also fixes them automatically. With AI-driven diagnostics, future CI/CD systems will identify root causes, restart failed processes, or roll back changes, with no human intervention required.

Continuous Learning Systems

Just like data models improve over time, so will the pipelines themselves. Continuous learning pipelines will use feedback loops to optimize testing, deployment, and performance tuning based on past outcomes.
Predictive Resource Management

Future pipelines will use AI-based analytics to forecast resource needs (such as server load, build queue times, or network usage) and automatically allocate capacity before bottlenecks appear.

Natural Language Interfaces for DevOps

Forget complex scripts. With the rise of large language models, engineers will soon use voice or chat-based commands to trigger deployments, monitor builds, and analyze logs in real time.

Fully Autonomous Delivery Pipelines

The ultimate goal? Self-optimizing CI/CD pipelines that monitor their own performance, deploy updates intelligently, and evolve continuously, delivering true end-to-end automation in DevOps.
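Returning to the Jenkins use case, the idea of selecting only high-impact tests based on previous failures can be sketched in Python. This is a hedged illustration rather than the team's actual model: a simple heuristic (smoothed historical failure rate multiplied by overlap with the changed files) stands in for the trained ML model, and the names `HistoryRecord`, `risk_score`, and `prioritize`, along with the sample data, are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HistoryRecord:
    name: str                  # test identifier
    runs: int                  # historical executions of this test
    failures: int              # how many of those executions failed
    covered_files: frozenset   # files the test is known to exercise


def risk_score(test, changed_files):
    """Heuristic stand-in for a learned model: smoothed failure rate
    multiplied by the number of changed files the test touches."""
    failure_rate = (test.failures + 1) / (test.runs + 2)  # Laplace smoothing
    overlap = len(test.covered_files & changed_files)
    return failure_rate * overlap


def prioritize(tests, changed_files, limit):
    """Return up to `limit` test names, riskiest first, skipping tests
    that do not touch any changed file."""
    changed = frozenset(changed_files)
    scored = [(risk_score(t, changed), t.name) for t in tests]
    relevant = [(score, name) for score, name in scored if score > 0]
    relevant.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in relevant[:limit]]


# Illustrative history; in practice this would come from CI build records.
history = [
    HistoryRecord("test_login", 100, 20, frozenset({"auth.py"})),
    HistoryRecord("test_checkout", 100, 5, frozenset({"cart.py", "auth.py"})),
    HistoryRecord("test_search", 100, 50, frozenset({"search.py"})),
]

selected = prioritize(history, {"auth.py"}, limit=2)
print(selected)  # prints ['test_login', 'test_checkout']
```

A pipeline step could pass `selected` to the test runner so that only those tests run on the commit, with the full suite kept for nightly builds. Swapping `risk_score` for a trained classifier would bring the sketch closer to the approach described in the case.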

Stay Ahead of the Curve: How AI/ML Strategies Future-Proof Your Business

AI ML October 13, 2025

This is not just another machine learning blog stuffed with words like “synergize” and “agentic AI”, which is exactly why you should read it. We bring you evidence-based information so you can pick and choose your strategy, and then we help you finesse it. By providing real-time, no-nonsense, practical solutions, we help you stay ahead of your competitors and grow your business rapidly and sustainably.

So, what will we be covering in this blog? At Tech360, we help companies in the manufacturing, healthcare, legal, retail, and non-profit sectors fundamentally rethink how they work and plan, with an AI/ML strategy that turns complex data into real business outcomes. Here, we show you exactly how we do it.

Why AI/ML Matters More Than Ever

Let’s start with the basics, briefly, to cover ground. Data is the new currency, and how you use it defines your competitiveness. Many businesses collect tons of operational data, but few are actually able to use it to make predictive, real-time decisions. Call it a value-action gap, like the one we see in environmentalism, where enthusiasts’ words and actions do not always match. A second issue is unreliable data collection: working with compromised data is a sure-fire way of razing your shop to the ground before you even think of re-strategizing.

Enterprise AI adoption is how you bridge the first gap. AI and ML help companies automate manual tasks, spot inefficiencies, and act faster, often before a problem even surfaces. Highly skilled technical talent, such as researchers, is how you tackle the second issue: you may employ the best automation technique, but without the people essential for innovation, you will run into a circular problem.
In short, AI-driven process optimization is how forward-thinking organizations are scaling efficiently and building resilience.

AI/ML in Action: Real Industry Impact

Let’s see how AI and machine learning are reshaping traditional sectors:

1. Manufacturing
From predictive maintenance to automated quality inspection, AI in manufacturing helps reduce downtime and improve output. Machines can now sense early wear and tear, helping maintenance teams act before breakdowns occur.

2. Healthcare
Hospitals and clinics use AI in healthcare for diagnostics, patient risk prediction, and administrative automation. It not only saves doctors’ time but also improves patient outcomes through faster, data-backed insights.

3. Legal
Legal firms are leveraging AI to analyze thousands of documents in minutes. Tasks like contract review, e-discovery, and compliance tracking have become faster, more accurate, and more affordable.

4. Retail
Retailers are using machine learning to personalize shopping experiences, forecast demand, and manage inventory better. The result? Happier customers and healthier profit margins.

5. Non-Profit
Even the non-profit world benefits from AI. Predictive analytics help forecast donor behavior, measure program impact, and optimize limited resources for maximum social good.

So, Is There a Roadmap to Staying Ahead?

Success with AI/ML doesn’t happen overnight. It takes clarity, planning, and the right partner. Here’s how you can start:

Begin with clear goals. When you focus on a few high-impact areas where AI can deliver measurable improvement, you can narrow down your broad purpose, stay on track, and get hard numbers along the way. This strategy helps you tackle all areas of focus in the long run.

Build a solid data foundation.
Clean, well-structured data is the backbone of every successful AI initiative, so it is essential to ensure that your data is high-quality, well-organized, and accessible. Start with an audit of your existing data to rule out inconsistencies.

Start small, then scale. This follows from the first point: test your ideas with pilot projects, prove ROI, and expand gradually. Once a pilot project shows positive results, you can integrate AI solutions across the rest of your business. This iterative approach reduces risk because you can iron out any challenges without committing significant resources upfront.

Blend domain and data expertise. Combine the knowledge of your industry, domain, and subject matter experts with that of AI/ML strategy specialists. Bringing these perspectives together keeps your strategy grounded in real-world relevance and on track with your business goals.

Prioritize explainability. Finally, trust is key, especially if you operate in a regulated industry. Build transparent systems that humans can understand and control, so that people are more likely to trust and embrace them. It might seem like an anecdotal or behaviour-based consideration, but this is an evidence-backed strategy for faster and more sustainable collaboration, which ultimately leads to more successful AI integration within the organization.

At Tech360, responsibility, accountability, and transparency are at the core of innovation. We help organizations create this balance, making predictive analytics for industries practical, compliant, and ready to scale.

How Tech360 Makes It Happen

At Tech360, we specialize in bringing AI to life across industries, without the technical overwhelm. We combine:

Industry-specific insights: We have a deep understanding of your sector’s data and regulatory needs, whether you work in manufacturing, retail, non-profit, healthcare, or legal.
End-to-end implementation: From data prep and modeling to deployment and governance, we handle every step of implementation and look after continuous improvement.

Scalability and flexibility: We empower you to handle growing data volumes, complex workflows, and real-time analytics with ease by assuming all workforce liabilities, so you can focus only on core work output.

Regulatory security: Just as importantly, we embed security-first principles, like data encryption, access control, and compliance with global data protection standards, at every stage.

Responsible AI: Every model we build includes human oversight, which, simply put, means you can trust that your AI behaves predictably, treats data securely, and avoids unintended bias.

Whether you’re exploring enterprise AI adoption or ready to deploy industrial AI applications, Tech360 is your strategic partner in intelligent transformation.

Lead the Future, Don’t Chase It

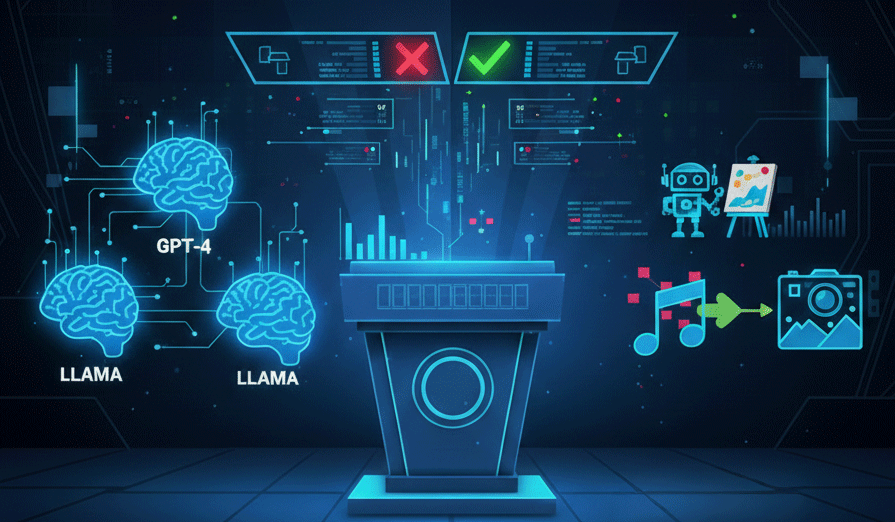

LLMs vs Generative AI: The Family Feud You Never Knew You Had to Know!

AI ML October 3, 2025

Ever wake up and wonder what the difference is between a Large Language Model (LLM) and generative AI? The rest of the world probably hasn’t. But here we are. One’s a mouthful, the other’s a buzzword, and both are busy behind the scenes whipping up the digital soup we’re all now spooning into our eyes and ears and inboxes.

What the Heck is Generative AI?

Let’s get one thing straight: generative AI is not an optimistic toaster that writes poems about bread (although, in a way, it could be). Generative AI is a catch-all name for any system that’s trained not just to recognize or sort things, but to make stuff. It can whip up new text, images, music, code, probably even a festive jingle about the sadness of Mondays. If creativity can be faked, generative AI is equipped for the job.

Midjourney, Stable Diffusion, and whirring robot authors everywhere: they’re all generative AI. They learn from mountains of existing data (cat pics, books, legal documents, emoji wars) and, when prodded, assemble something new-ish. Fresh output, but only as fresh as the leftovers it was trained on.

So, LLMs… Are They Just Generative AI’s Kid Brother or Something?

Sort of, yeah. LLMs are Large Language Models: the big beasts trained on oceans of human text. The point? To model (simulate, copy, whatever) language so well that they can autocomplete, generate responses, summarize text, sell you vitamins, pretend to be Shakespeare, the works. GPT-4, PaLM, Claude, or that chatbot that promises to fix your spelling but instead invents a recipe for pudding with tomatoes in it: all LLMs.

But LLMs focus on text. They are, in AI taxonomy, a subset of generative AI: all LLMs are generative, but not all generative AI systems are language models. A “cat generator” isn’t a poet, but an LLM might be.

The Great Divide: What Sets Them Apart?

Let’s put it this way: Generative AI is everyone at the talent show.
LLMs are the kid who only does spoken word and sometimes murmury monologues about spaghetti. Generative AI can create images, music, videos, tahini sauce (okay, not that), whereas LLMs are obsessed with everything textual: stories, responses, code, translation, and making sure your email’s punctuation is only slightly deranged.

Generative AI: “I’ll dream up faces, melodies, and fake news articles.”
LLM: “I’ll text you about it.”

Under the Hood: Tech Talk Without a Degree

Generative AI runs on different breeds of neural networks: GANs (Generative Adversarial Networks), VAEs (Variational Autoencoders), and, when it’s feeling fancy, Transformers. LLMs, for their part, worship at the altar of Transformers, models hungry enough to read a library and then forget your birthday anyway.

Both need truckloads of data: words, images, videos, tweets, angry blog comments. LLMs especially binge on text: the bigger the training set, the cleverer (and maybe weirder) the output.

Use Cases: Where the Magic Actually Happens

Imagine a world where marketing teams don’t have to write their own press releases and artists can make ten thousand versions of a duck with sunglasses. That’s generative AI at play. Meme generation? Sure. Audiobooks narrated by robots? Done. AI-powered design tools that solve problems nobody had? Of course.

LLMs, though? They’re in your support chat, your translation app, your school essays (oh, yes they are), your code autocomplete, your next great love letter to a pizza joint.

Wait, Aren’t Some LLMs Getting into Images, Too?

You’re right, wise reader. Since around mid-2023, some LLMs have become “multimodal” (a word that would get you extra points in Scrabble, if the AI let you cheat). Multimodal LLMs can process stuff that isn’t just words (images, audio, all sorts of ancient memes) and then spit out a summary, description, or criticism, as if they’d been writing Yelp reviews for ages.
Still, LLMs are best-in-class for text; generative AI is a bigger, weirder club, admitting anyone who can generate something, even if it’s only convincing to their algorithmic moms.

The Blurry Line: Why Definitions Drive Professors Mad

Every so often, tech folk love a Venn diagram. They’ll show you circles for AI, generative AI, LLMs, foundation models, machine learning, and probably a doodle of a raccoon for no reason. Where do LLMs end and generative AI begin? It gets hazy.

A good rule: if the AI cooks up something that didn’t exist before, it’s probably generative. If it can chat, summarize, translate, or write like your neighbor after three coffees, an LLM is probably behind it. But as multimodal LLMs handle images, video, and emoji, things start overlapping like socks after laundry day.

Pitfalls of Both: It’s Not All Roses

Generative AI can produce deepfakes, enable art theft, and churn out songs by people who never existed (no, the AI wasn’t at Woodstock). LLMs can make stuff up, hallucinate facts, and confidently tell you that Paris is a breakfast cereal (double-check, always). Both risk inheriting biases, misinformation, and problems baked into their training data.

But that’s the fun and the danger. In the right hands, these models spark innovation. In the wrong hands: chaos, confusion, and ten million self-published AI novels that sound suspiciously like instruction manuals for assembling Swedish furniture.

So, What’s the Takeaway?

LLM versus generative AI isn’t a winner-take-all cage match. It’s the difference between a band and the lead singer. Generative AI is everything that makes and invents; LLMs are the ones writing limericks about your socks.

Next time an AI spits out a pancake recipe or two paragraphs of existential angst about shoelaces, ask yourself: is this a generalist, or a specialist? Is it the full club, or just the poet looking for applause?

The lines are fuzzy, the answers mostly made up on the fly, but hey, welcome to the future! Isn’t that just fantastic?!
If a toaster writes you a sonnet, congratulations: you’ve met both.